Offloading Dataverse Attachments To DMS

Introduction

File storage was always an issue when you wanted to store a lot of documents inside your environment in the past. Microsoft was very aware of that so today we have a much better situation since we don't store files in the database, but in the separate storage used only for files.

Some customers still don't want to store the files inside the Dataverse environment, but they want to access the file through the Model-Driven app. The question is how to achieve this inside Dataverse while keeping the notes UX the same. That way users will use well know notes feature to upload/download documents.

Idea

We need to modify 2 processes to achieve this solution.

- Uploading notes with attachments

- Downloading attachments

When we upload not that contains attachment we want to upload that attachment to some DMS system and free the space in our Dataverse environment so that we don't use storage twice.

On the other hand, when we want to download the attachment to our local machine we don't want to go to the DMS to do it, but we want to do it straight from the timeline where the notes are shown. We are obviously missing the link to the file in this case so we somehow need to pull the file from the DMS every time the user initiates downloading the file in Dataverse.

Solution

Uploading notes with attachments

The first issue is how to upload the attachments to DMS every time user uploads a new note. We can do that via a batch job or in a plugin, but maybe there is a better way to do that.

Doing it via batch job we don't get near-real-time uploading experience and we always need to check if there are new attachments even if there are no new ones uploaded.

Plugin on the other hand can be a problem because we maybe need to use 3rd party libraries to make our life easier or maybe upload fails and in that case it's not that easy to initiate retry logic because of the 2 minutes timeout we have in the plugin runtime.

This example will show you how to utilize the webhooks and Azure Function to do the job for you. You ask why?

Webhooks will be used because that way you can easily notify another system that a new event happened in the Dataverse. That way you don't need to check every now and then if something new arrived for sync. Plus side is also that every event holds a lot of data that will be sent via webhook. In our case, small attachments will be sent via event payload immediately and larger files will need to be fetched from the Dataverse since there is a message limit of 256kB for webhook payloads.

The handler for our webhook will be Azure Function that will handle uploading the file to the DMS system. That way we offload the upload process also from the Dataverse to the Azure where we can do a lot more things than in plugins.

All failed uploads would be handled with some fallback mechanism, which will not be included in this example, but I usually go with batch job route which will be relevant in really small number of cases.

Upload Implementation

This example will use Azure Blob storage as an DMS, but it can easily be used with any other system.

First we need to create a function that will handle note create event. Below is the function that we will use.

[FunctionName("SaveAttachmentToBlobStorage")]

public static async Task Run([HttpTrigger(AuthorizationLevel.Function, "post", Route = null)] HttpRequestMessage req, TraceWriter log)

{

try

{

var requestBody = await req.Content.ReadAsStringAsync();

var remoteContext = DataverseHelper.JsonToRemoteExecutionContext(requestBody);

var inputParameters = remoteContext.InputParameters;

var exceededHeader = req.Headers.Contains("x-ms-dynamics-msg-size-exceeded");

log.Info("Message size exceeded: " + exceededHeader);

var attachment = !exceededHeader

? (Entity)inputParameters["Target"]

: DataverseHelper.GetAttachment(remoteContext.PrimaryEntityId);

var mimetype = (string)attachment["mimetype"];

var base64Body = (string)attachment["documentbody"];

BlobStorageHelper.UploadFile(attachment.Id, mimetype, base64Body);

log.Info("File uploaded to blob storage");

DataverseHelper.ClearAttachmentContent(attachment.Id);

log.Info("Cleared attachment body in Dataverse.");

return req.CreateResponse(HttpStatusCode.Created);

}

catch (Exception ex)

{

log.Error(ex.Message, ex);

return req.CreateResponse(HttpStatusCode.InternalServerError, ex.Message);

}

}

What does this function do?

First it converts JSON from the payload to the familiar RemoteExecutionContext object with JsonToRemoteExecutionContext function.

public static RemoteExecutionContext JsonToRemoteExecutionContext(string json)

{

using (var ms = new MemoryStream(Encoding.Unicode.GetBytes(json)))

{

var serializer = new DataContractJsonSerializer(typeof(RemoteExecutionContext));

var context = (RemoteExecutionContext)serializer.ReadObject(ms);

return context;

}

}

After we have execution context we can get everything we need to start with uploading the document.

We need to check if the x-ms-dynamics-msg-size-exceeded header is set to know what to do next. Why? Webhook will not send input parameters inside the execution context if the message size is too big. If the header is sent we need to fetch the record first to proceed, otherwise we have everything we need in Target object inside input parameters.

If we need to fetch the record form Dataverse we need to get 3 fields to perform the aciton with GetAttachment function.

public static Entity GetAttachment(Guid id)

{

var service = GetService();

var attachment = service.Retrieve("annotation", id, new ColumnSet("documentbody", "filename", "mimetype"));

return attachment;

}

Next, we need to send the attachment to the DMS and in this case, we will upload it to the Blob storage with the UploadFile function.

public static void UploadFile(Guid fileId, string mimeType, string fileBody)

{

var storageacc = CloudStorageAccount.Parse(ConnectionString);

var blobClient = storageacc.CreateCloudBlobClient();

var container = blobClient.GetContainerReference("attachments");

container.CreateIfNotExists();

var blockBlob = container.GetBlockBlobReference(fileId.ToString("D"));

blockBlob.Properties.ContentType = mimeType;

var data = Convert.FromBase64String(fileBody);

using (var contents = new MemoryStream(data))

{

blockBlob.UploadFromStream(contents);

}

}

After successful upload to the DMS we need to free up the space in Dataverse by clearing the documentbody field.

public static void ClearAttachmentContent(Guid attachmentId)

{

var service = GetService();

var updatedAnnotation = new Entity("annotation", attachmentId)

{

["documentbody"] = null

};

service.Update(updatedAnnotation);

}

If everything went OK our attachment would be uploaded in the DMS successfully and we are ready to configure the webhook that will trigger this function, but before that, we need to publish the function to Azure.

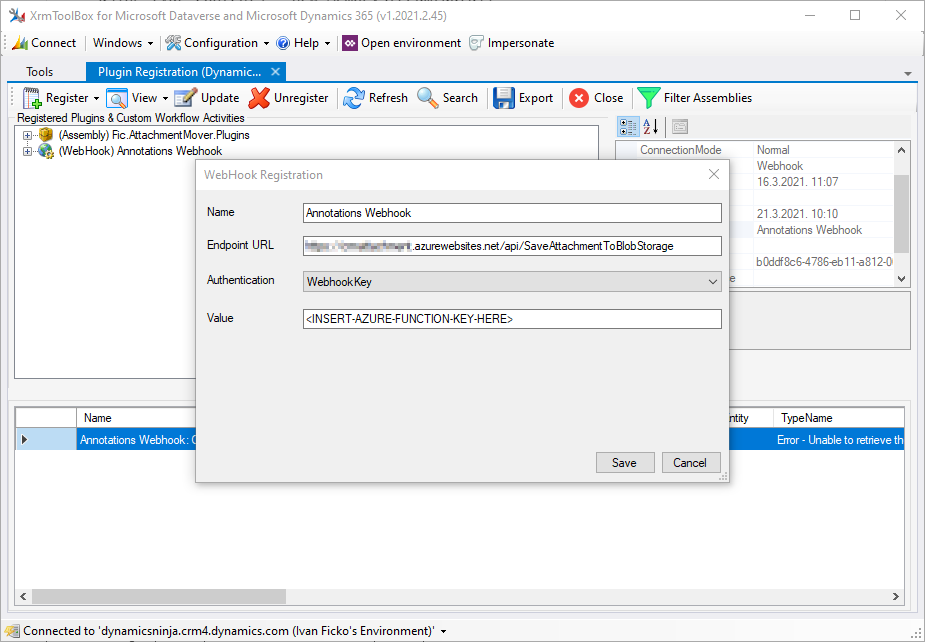

Open the Plugin Registration tool to start registering the webhook. Go to Register -> Register New Web Hook.

Populate the URL for your function and copy the Function Key from the Azure portal.

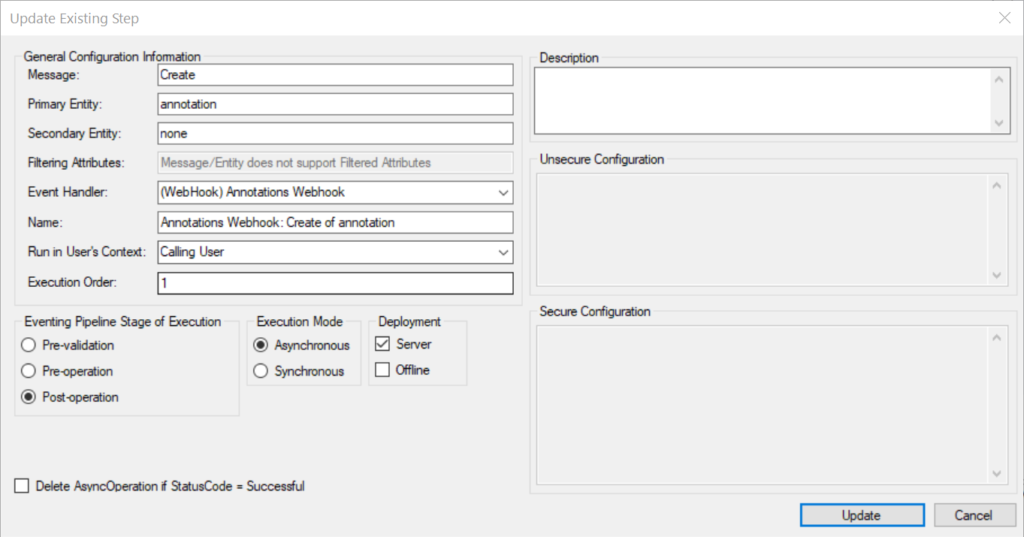

Next one up is to register the Create step for this webhook.

The step should be created as async one because that way we will not wait for the document upload every time we add a new file to the Dataverse notes. The key thing here is to set the message to Create and the primary entity to annotation.

After we created the webhook step we are ready to upload all files to the DMS every time a new file is added to the Dataverse notes entity.

Next step is to handle retrieving that file every time user initiates the download form the timeline.

Downloading attachments

When attachments are stored in the DMS we will not have document content stored in Dataverse, but we will have a reference that will be used to retrieve it from the DMS in real-time.

We need to register the Retrieve plugin which will retrieve files from DMS every time user initiates the download from the timeline.

If the upload is still not initiated file will be retrieved form the Dataverse, but if upload was completed successfully file will be retrieved from the DMS in real time.

Download Implementation

First we need to implement the Azure function that will download the file form the Blob storage for us.

[FunctionName("DownloadFileFromBlobStorage")]

public static async Tas Run([HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)]HttpRequestMessage req, TraceWriter log)

{

try

{

var id = req.GetQueryNameValuePairs().FirstOrDefault(q => string.Compare(q.Key, "id", true) == 0).Value;

var base64 = BlobStorageHelper.DownloadFile(new Guid(id));

var resp = new HttpResponseMessage(HttpStatusCode.OK)

{

Content = new StringContent(base64, System.Text.Encoding.UTF8, "text/plain")

};

return resp;

}

catch (Exception ex)

{

return req.CreateResponse(HttpStatusCode.InternalServerError, ex.Message);

}

}

We will send Dataverse ID as the input parameter to the function so we can browse the Blob storage and retrieve the right file.

Custom method called DownladFile will help us to get the file from the blob.

public static string DownloadFile(Guid fileId)

{

var storageAccount = CloudStorageAccount.Parse(ConnectionString);

var blobClient = storageAccount.CreateCloudBlobClient();

var container = blobClient.GetContainerReference("attachments");

var blockBlob = container.GetBlockBlobReference(fileId.ToString("D"));

byte[] bytes;

using (var ms = new MemoryStream())

{

if (blockBlob.Exists())

{

blockBlob.DownloadToStream(ms);

}

bytes = ms.ToArray();

}

var base64 = Convert.ToBase64String(bytes);

return base64;

}

That method will return a simple base64 string because it's all we need to get the file content, everything else is still stored in the Dataverse in note entity.

Now when we got our function ready all we need to do is create a plugin that will be executed on Retrieve message.

public class AnnotationRetrievePlugin : IPlugin

{

public void Execute(IServiceProvider serviceProvider)

{

var context = (IPluginExecutionContext)serviceProvider.GetService(typeof(IPluginExecutionContext));

var entity = (Entity)context.OutputParameters["BusinessEntity"];

var documentBody = entity.Contains("documentbody") ? (string)entity["documentbody"] : "";

if (documentBody != "") { return; }

var client = new HttpClient();

var responseMessage = client.GetAsync($"https://.azurewebsites.net/api/DownloadFileFromBlobStorage?code=iF8V9cdmVGxW022gxlDWr038pNxy/rPUatcbHfO5Z1F5FcHJIlnMng==&id={entity.Id:D}").Result;

var jsonResponseString = responseMessage.Content.ReadAsStringAsync().Result;

if (jsonResponseString != "") { entity["documentbody"] = jsonResponseString; }

context.OutputParameters["BusinessEntity"] = entity;

}

}

Retrieve plugin is quite simple in this case. Basically all it does is that it checks if documentbody field is empty and if it is it calls the function with the annotation ID to get the file. After that it just sets the documentbody field to the value retrieved from the blob.

That way every time retrieve message is executed it will replace the document body with the value form the DMS.

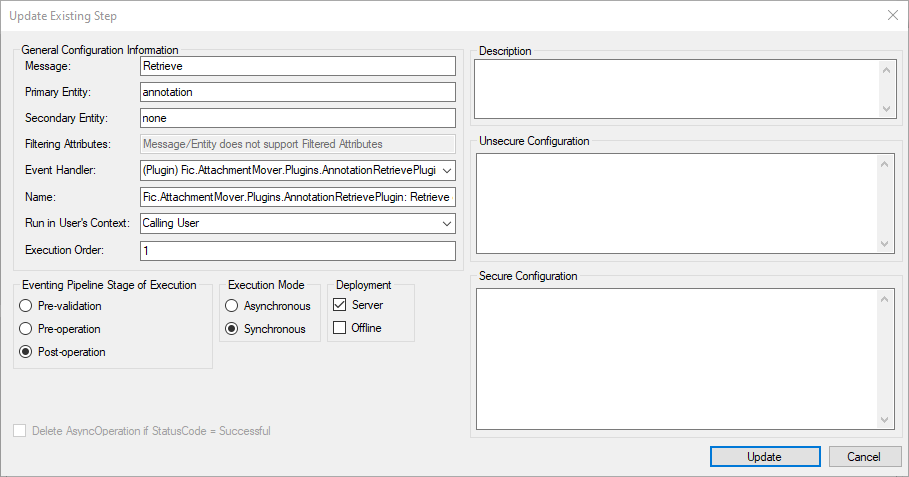

Now we got everything to set the last step and that is registering the plugin.

Open Plugin Registration Tool once again and this time register step on the AnnotationRetrievePlugin.

Set message to Retrieve and primary entity to annotation. Execution mode should be sync this time because we want to get the file straight away.

When this is set we can finally try the whole solution and see if it's working.

Conclusion

This way you can easily move attachments to the DMS system without changing the UX for users in the model-driven app. The downside of this approach is that you of course need to have some fallback mechanism that will collect all the errors that failed to finish the upload files to the DMS, but luckily this will not affect a lot of files from my experience.

I know that that was a big issue in the past since file storage was pricy in the Dataverse, but nowadays some clients still don't want to store the attachments in the Dataverse so I use this approach to achieve their needs.

Feel free to comment how you handle those kind of scenarios so we can maybe improve this process a bit, if not than I hope that you will find this useful.